Who we are

Our team consists of five professionals with over 65 years of combined experience in technical fields. Our team members have worked at some of the biggest names in tech - Waymo, Google, Intel, Tesla and Nvidia. One of our team members has nearly 15 years of experience in retail, providing us with valuable insights into customer needs and preferences that help shape our product's development.

Furthermore, we are all data science graduate students at UC Berkeley, and we are working on this idea for our capstone project. We have gained significant knowledge in data science and machine learning over the past few years and we are eager to put our know-how into practice.

Melody Masis

Abhishek Shetty

Vijaykumar Krithivasan

Mehmet Inonu

Landon Austin Yurica

Our Mission

At Perceive AI, we believe accessibility is a right—not a luxury. Our goal is to remove barriers for the visually impaired, starting with one of the most essential tasks: grocery shopping.

What We Do

Perceive AI is an assistive shopping app that uses real-time grocery item recognition to guide users through stores with precision. Unlike conventional tools that flood users with unnecessary information, Perceive AI focuses solely on items the user actually needs—those on their shopping list.

Through clear directional audio guidance and a screen-reader-friendly interface, the app ensures a smooth, frustration-free experience. With built-in voice command functionality, users can interact hands-free and with full autonomy.

The Challenge

Grocery shopping shouldn’t require help.

Yet for millions of people with visual impairments, navigating produce aisles often means relying on others — or enduring clunky, noisy tech that bombards them with audio overload.

📉 Existing tools are either too limited or too loud.

💸 Visually impaired shoppers face third-party shopping fees averaging $48 per trip.

🧑‍🦯 Over 300 million people worldwide live with visual impairment.

Our Solution: Perceive AI

A seamless way to shop with confidence.

Perceive AI is an intuitive mobile app that:

🛒 Uses voice commands to create your shopping list.

👓 Alerts you when you’re near a produce item from your list.

🧠 Combines powerful computer vision + LLMs to detect, confirm, and guide you to items in real-time.

Perceive AI MVP: Produce at Trader Joe's

Our Minimum Viable Product (MVP) targets the Trader Joe's produce section, addressing users' desire for independent shopping where online options are unavailable. The app features real-time product recognition integrated with the user's shopping list, prioritizing needed items. It delivers audio guidance and clearly announces list items as they are found; based on user feedback, it also supports discreet headphone use. Crucially, the MVP stays reliable under poor connectivity through offline shopping list access, and it incorporates an accessible interface supporting screen readers and voice commands.

Our App in Action

System Architecture

Upon app launch, the device connects via API Gateway, registering its unique device and websocket IDs for session management.

User Interaction & List: The user interacts with the mobile app (UI) to build a shopping list.

On-Device Object Detection: The app uses the device camera and an integrated YOLO model for real-time object detection from the video feed. It can filter detections based on the user's shopping list.

Cloud Upload (on Match): When a relevant object is detected (matching the list), the app (via Amplify) uploads the corresponding frame to an S3 bucket. The image file name includes the device ID and detected object.

Backend Processing Trigger: The S3 upload triggers a Lambda function.

Device Lookup: Lambda retrieves the device ID from the S3 object and queries a DynamoDB table to find the corresponding websocket connection ID.

AI Guidance Generation: Lambda sends the image and a predefined prompt to a multimodal LLM hosted on SageMaker. The LLM returns text-based guidance related to the detected object.

Response Delivery: Lambda uses the retrieved connection ID and API Gateway to send the LLM's text response back specifically to the originating device's websocket connection.

User Feedback: The mobile app receives the text data and provides audio feedback to the user.

This architecture enables real-time, on-device object detection filtered by user input, triggering cloud-based AI analysis via an LLM, and delivering targeted guidance back to the specific user's device without manual intervention for prompting the cloud AI.
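The flow above can be sketched in a few plain Python functions. This is an illustrative sketch, not our production code: the `Detection` type, the `frames/<device>/<label>/<frame>.jpg` key layout, the 0.5 confidence cutoff, and every function name are assumptions made for the example. A plain dictionary stands in for the DynamoDB table, and two callables stand in for the SageMaker-hosted LLM and the API Gateway websocket push.

```python
from dataclasses import dataclass
from urllib.parse import quote, unquote


@dataclass(frozen=True)
class Detection:
    label: str         # class name predicted by the on-device detector
    confidence: float  # detector score in [0, 1]


def filter_detections(detections, shopping_list, min_confidence=0.5):
    """Keep only confident detections whose label is on the shopping list."""
    wanted = {item.strip().lower() for item in shopping_list}
    return [d for d in detections
            if d.label.lower() in wanted and d.confidence >= min_confidence]


def build_object_key(device_id, label, frame_id):
    """Encode the device ID and detected object in the uploaded file name.

    Hypothetical layout; assumes device_id contains no '/' characters.
    """
    return f"frames/{device_id}/{quote(label.lower(), safe='')}/{frame_id}.jpg"


def parse_object_key(key):
    """Recover (device_id, label) from a key made by build_object_key."""
    _prefix, device_id, label, _filename = key.split("/")
    return device_id, unquote(label)


def handle_upload(key, connections, generate_guidance, send):
    """Backend step: find the device's connection and push guidance to it.

    connections        dict device_id -> connection_id (stand-in for DynamoDB)
    generate_guidance  callable(key, label) -> str (stand-in for the LLM call)
    send               callable(connection_id, text) (stand-in for the websocket push)
    """
    device_id, label = parse_object_key(key)
    connection_id = connections.get(device_id)
    if connection_id is None:
        return False  # device is no longer connected; nothing to deliver
    send(connection_id, generate_guidance(key, label))
    return True


if __name__ == "__main__":
    seen = [Detection("Banana", 0.91), Detection("Onion", 0.88)]
    for match in filter_detections(seen, ["banana", "lemon"]):
        key = build_object_key("device-42", match.label, frame_id=7)
        handle_upload(key, {"device-42": "conn-abc"},
                      lambda k, label: f"The {label} is in front of you.",
                      lambda conn, text: print(conn, "->", text))
```

Putting the device ID in the object key lets the backend route a response from the upload event alone, with no extra request from the app.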

Data Science Methods

Data Preparation

We collected and labeled over 4,000 images from Trader Joe’s locations. Recognizing that individual images often contained multiple distinct produce items, we approached this as a multi-label dataset problem. This characteristic presents a challenge because standard stratification methods, which work well for single-label data like spam detection, are ill-suited here; ensuring the correct proportion of 'apples' across splits might disrupt the proportion of 'onions', for instance. To properly handle this complexity, we employed a specialized technique, Multilabel Stratified Shuffle Split. This algorithm is designed specifically for multi-label scenarios and works to preserve the proportional representation of each individual label, as well as combinations of labels, across the different data subsets. Using this method, we partitioned our data into training (70%), validation (15%), and testing (15%) sets, ensuring each split statistically mirrors the original dataset for more reliable model development and evaluation.
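To illustrate the idea behind multi-label stratification, here is a simplified greedy sketch in pure Python. It is not the implementation we used: a class named `MultilabelStratifiedShuffleSplit` is available in the open-source `iterative-stratification` package, whereas the function below (`multilabel_split`, a hypothetical name) only mimics the core heuristic. It handles the rarest label first and sends each image to the split that still needs that label the most.

```python
import random
from collections import Counter


def multilabel_split(samples, fractions, seed=0):
    """Greedily assign samples to splits so each label keeps roughly its share.

    samples    dict mapping image name -> set of labels in that image
    fractions  dict mapping split name -> target fraction (should sum to 1)
    """
    rng = random.Random(seed)
    label_totals = Counter(l for labels in samples.values() for l in labels)
    # How many more samples each split still "wants", overall and per label.
    want_total = {s: f * len(samples) for s, f in fractions.items()}
    want_label = {s: {l: f * c for l, c in label_totals.items()}
                  for s, f in fractions.items()}
    splits = {s: [] for s in fractions}
    remaining = dict(samples)

    def assign(name, key_label=None):
        labels = remaining.pop(name)

        def need(split):
            by_label = want_label[split][key_label] if key_label is not None else 0.0
            # Ties fall back to overall need, then to a seeded random draw.
            return (by_label, want_total[split], rng.random())

        best = max(fractions, key=need)
        splits[best].append(name)
        want_total[best] -= 1
        for l in labels:
            want_label[best][l] -= 1

    while remaining:
        left = Counter(l for labels in remaining.values() for l in labels)
        if not left:  # only unlabeled images remain
            for name in sorted(remaining):
                assign(name)
            break
        rarest = min(left, key=lambda l: (left[l], l))
        for name in sorted(n for n, labels in remaining.items() if rarest in labels):
            assign(name, rarest)
    return splits


if __name__ == "__main__":
    toy = {"a.jpg": {"apple"}, "b.jpg": {"apple", "onion"}, "c.jpg": {"onion"},
           "d.jpg": {"apple"}, "e.jpg": {"lemon", "apple"}, "f.jpg": set()}
    result = multilabel_split(toy, {"train": 0.70, "val": 0.15, "test": 0.15})
    print({split: len(names) for split, names in result.items()})
```

Because an image carrying several labels counts toward all of them at once, the greedy pass is only approximate for the more common labels. That is the trade-off any multi-label stratifier has to manage.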

Model & Training

We fine-tuned a YOLOv11x model, chosen for its strong accuracy-speed balance. Training ran for 50 epochs using pre-trained weights adapted to our dataset.

Evaluation

We tracked mAP50 and mAP50–95 throughout training. The final model reached 0.85 mAP50 on the validation set, showing strong detection performance.
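For readers unfamiliar with these metrics: mAP50 is the mean, over classes, of the average precision (AP) obtained when a predicted box counts as correct if its intersection over union (IoU) with a ground-truth box is at least 0.5. mAP50–95 averages the same quantity over IoU thresholds from 0.5 to 0.95. The sketch below computes AP for a single class on a single image. It is a simplified illustration with hypothetical function names, not the evaluation code of our training framework.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(predictions, ground_truth, iou_threshold=0.5):
    """AP for one class.

    predictions   list of (confidence, box)
    ground_truth  list of boxes
    """
    if not ground_truth:
        return 0.0
    matched = set()
    hits = []
    # Visit predictions from most to least confident.
    for _conf, box in sorted(predictions, key=lambda p: p[0], reverse=True):
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(ground_truth):
            if idx in matched:
                continue  # each ground-truth box can be claimed only once
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        if best_idx is not None and best_iou >= iou_threshold:
            matched.add(best_idx)
            hits.append(True)
        else:
            hits.append(False)

    # Precision and recall after each prediction.
    precisions, recalls, true_positives = [], [], 0
    for rank, hit in enumerate(hits, start=1):
        true_positives += hit
        precisions.append(true_positives / rank)
        recalls.append(true_positives / len(ground_truth))

    # Make precision non-increasing in recall, then integrate the curve.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, previous_recall = 0.0, 0.0
    for precision, recall in zip(precisions, recalls):
        ap += (recall - previous_recall) * precision
        previous_recall = recall
    return ap
```

Raising `iou_threshold` toward 0.95 demands tighter boxes, which is why mAP50–95 is typically lower than mAP50 for the same model.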

Visual tests confirmed the model could detect multiple items per image and handle background clutter and motion blur.

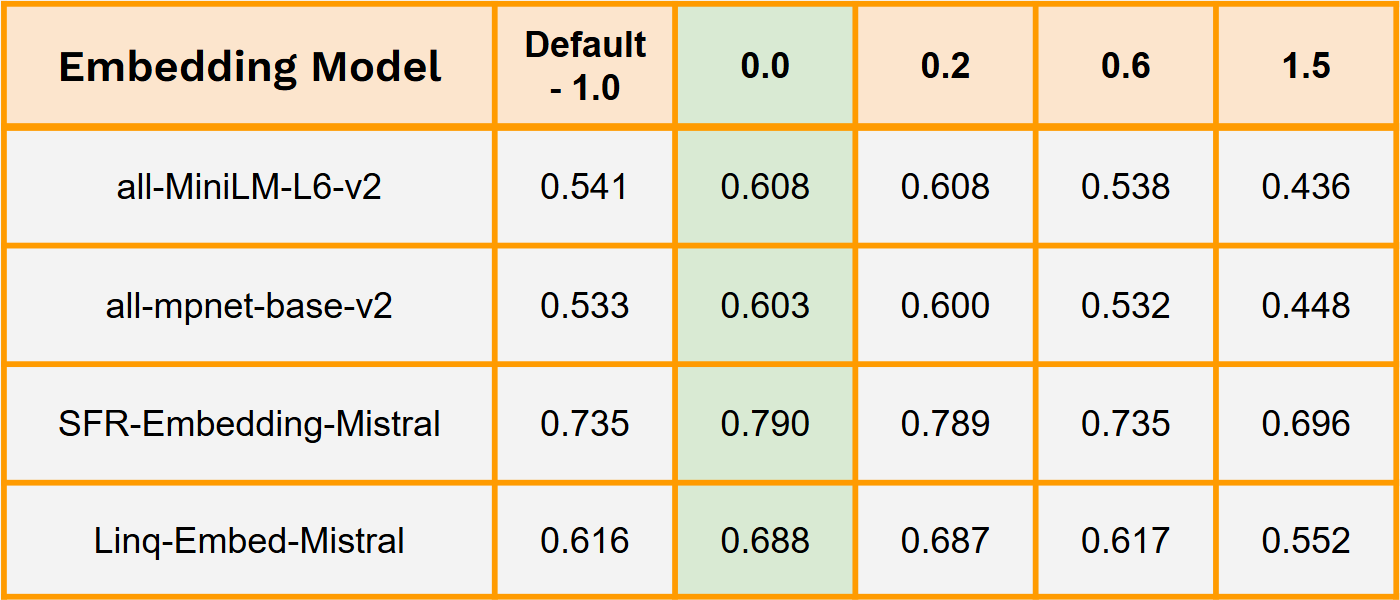

LLM Experiments

We converted our dataset of labeled grocery store images into a benchmark for the LLM. We selected 200 random images from this dataset and prompted GPT-4o to provide short navigation guidance to the labeled item in each image. Treating these GPT-4o responses as the gold standard, we varied the temperature of the vision LLM to see which setting produced the most accurate guidance. Using cosine similarity between sentence embeddings as a proxy for agreement with the gold-standard responses, we found that a temperature of 0 yielded the best results.
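The comparison can be sketched as follows. The short vectors are toy stand-ins for real sentence embeddings, and `best_temperature` is a hypothetical helper name; the sketch only shows how a mean cosine similarity against the gold-standard responses would rank temperature settings.

```python
import math
from statistics import mean


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def best_temperature(gold, candidates):
    """Pick the temperature whose responses are closest to the gold standard.

    gold        list of embeddings of the gold-standard responses
    candidates  dict temperature -> list of embeddings, in the same image order
    """
    scores = {
        temperature: mean(cosine_similarity(g, c) for g, c in zip(gold, embeddings))
        for temperature, embeddings in candidates.items()
    }
    return max(scores, key=scores.get), scores


if __name__ == "__main__":
    # Toy 2-D "embeddings"; real ones come from a sentence-embedding model.
    gold = [[1.0, 0.0], [0.0, 1.0]]
    candidates = {
        0.0: [[2.0, 0.0], [0.0, 3.0]],
        0.7: [[1.0, 1.0], [1.0, 1.0]],
    }
    print(best_temperature(gold, candidates))
```

Cosine similarity ignores vector length, so two responses that point in the same semantic direction score highly even if one is more verbose.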

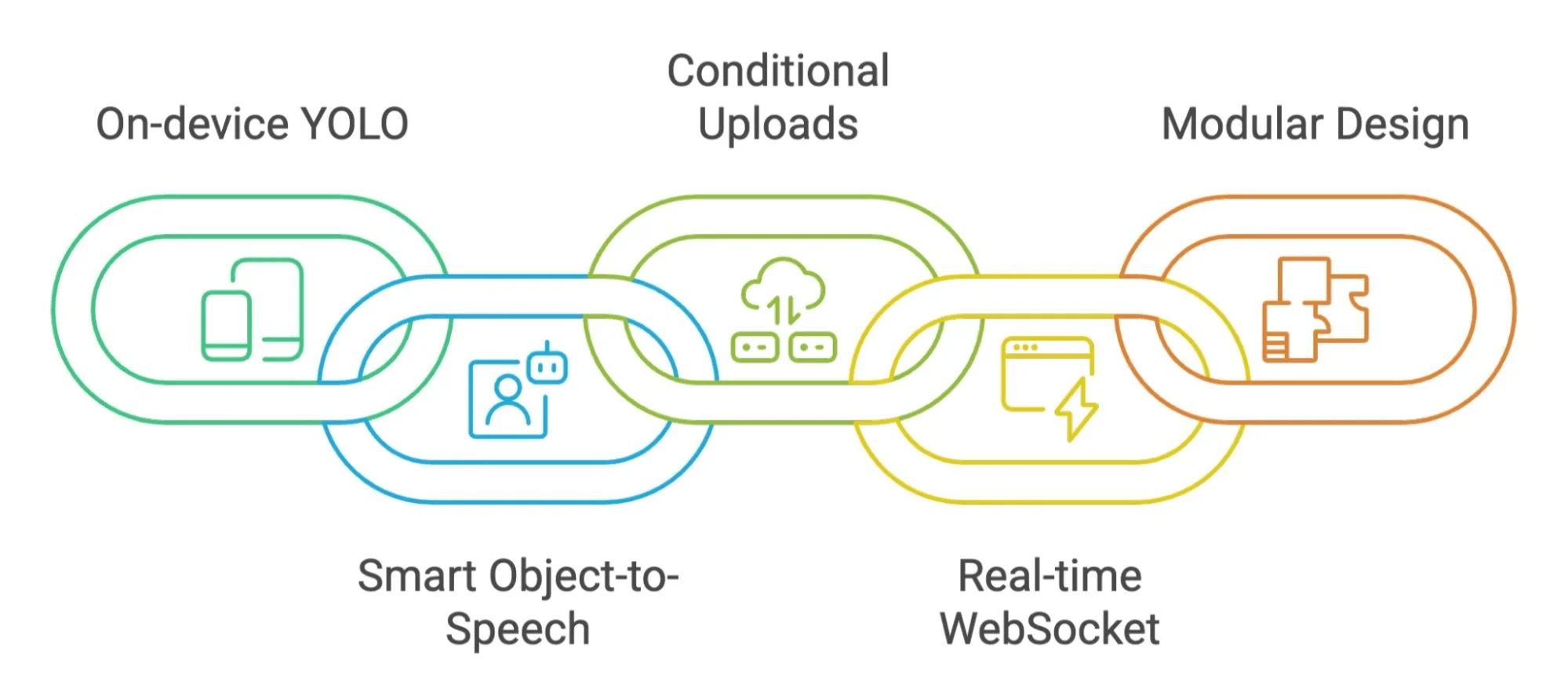

Technical Innovation

Our application leverages an on-device YOLO model, custom-trained on grocery produce, with detections filtered to the items on the user's grocery list. When a listed item is detected, it instantly triggers a smart pipeline, sending minimal data to an LLM to generate audible feedback. This feedback is delivered immediately back to the user over a responsive WebSocket connection, with connection IDs tracked in DynamoDB. This selective upload approach ensures cost efficiency, while the modular architecture allows for easy integration of different LLMs and future scaling to platforms like augmented reality.

Next Steps

App Development

Our development focuses on optimizing performance for speed and battery longevity, crucial for a seamless shopping experience. Accessibility is paramount, extending beyond basics to include robust screen reader integration, intuitive voice commands, and refined audio feedback. We are also refining the UI/UX for simplicity and clarity, reducing cognitive load for effortless interaction. Guided by rigorous testing and valuable feedback from the visually impaired community, our goal is to ensure the app is practical, empowering, and truly functional in real-world grocery environments.

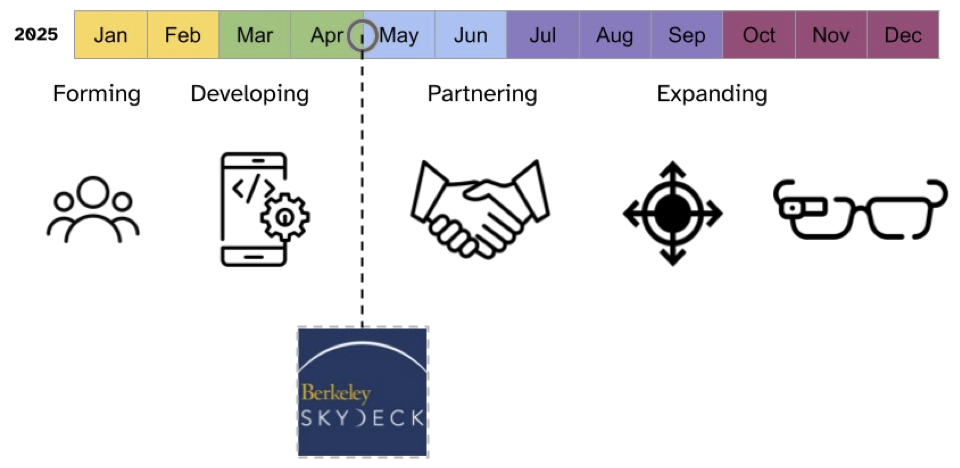

Product Roadmap

We were recently accepted into Berkeley SkyDeck's Pad-13 incubator program!

We intend to leverage the mentorship and resources over the next four months, focusing on two key pillars: deepening value through direct grocery store partnerships, and enhancing the user experience via hardware integration and market expansion. Our immediate priority is partnering with grocery chains to unlock precise in-store navigation and richer product data. Longer-term, we aim to expand into larger retailers like Target and Walmart and are evaluating smart glasses integration for a seamless, hands-free option, working to solidify Perceive AI as an essential tool for the visually impaired community.

Perceive AI.

We’re making grocery shopping accessible and independent for the visually impaired.